The future of credit underwriting under AI regulation: Implications for the EU and beyond

In today’s lending landscape, it is common practice for lenders to enhance borrowers' external credit scores using their own metrics, advanced statistical models, or heuristics to make credit decisions.

However, this approach is now on the brink of tighter regulation as the EU’s first Artificial Intelligence (AI) Act is about to come into play. With new regulations on the horizon, two crucial questions arise for lenders:

1. How will credit underwriting activities be classified under the proposed AI Act?

2. What new regulations do lenders need to be aware of once the act comes into effect?

Maximilian Eber, CPTO at Taktile, and Philipp Hacker, Professor of Law and Technology at European University Viadrina Frankfurt and EU-level policy advisor on the AI Act, have joined forces to answer these questions.

In this article, they summarize the proposed AI Act and take a deep dive into the potential implications of the upcoming regulations for lenders, including those that do not use AI in the strict technical sense.

Key takeaways

- The AI Act applies to a broad range of technologies, including not only advanced machine learning but also complex software and decision-making

- The AI Act classifies credit underwriting as a “high-risk” activity that will be subject to additional regulation

- For some of the new AI rules, existing compliance with banking regulations and guidelines will suffice; however, for other new rules, banking compliance will not be enough

- Going forward, to maintain compliance with the AI Act, it will be crucial for lenders to have sound and transparent underwriting infrastructure, especially for automated decision-making

What is the Artificial Intelligence (AI) Act?

The AI Act is the EU’s flagship legislation regulating the development and deployment of AI systems in many sectors of society, ranging from business applications like credit scoring and insurance to administration and law enforcement.

It aims to regulate the specific risks of AI – such as opacity, discrimination, unforeseeable behavior, or lack of predictive quality – by subjecting the developers and users of these models to several specific legal obligations.

The Act formalizes and adds to best practices often used in the industry, bringing specific monitoring, transparency, documentation, human oversight, and data governance rules to the game.

There are currently three different versions of the AI Act:

- In April 2021, the first version was proposed by the EU Commission

- In December 2022, the Council of the EU, representing Member States, issued its opinion, the so-called General Approach

- In June 2023, the European Parliament adopted its position

Right now, these three actors are negotiating behind closed doors in a process called the trilogue. The final version of the AI Act is expected around the end of this year, and most of its rules will apply from around mid-2026 onwards.

However, due to its broad sweep and extensive duties, companies using AI or any complex decision models should prepare for AI Act compliance much sooner.

As you will see further below, the AI Act is expected to apply to developers and users of almost any complex decision-making process – irrespective of whether they are using neural networks, generative AI, or any other advanced machine learning technology.

The AI Act's lens on credit underwriting as a high-risk use case

The AI Act contains four different risk categories for AI models based on the use cases in which the system is deployed:

1. Prohibited uses

2. High-risk uses

3. Limited-risk uses

4. Unregulated uses

Prohibited uses include “social scoring” and remote biometric identification in public spaces (with limited exceptions). However, most of the AI Act covers high-risk use cases, ranging from AI applications in migration or criminal justice contexts to economic use cases in medicine, employment, insurance – and credit scoring.

Any other AI systems still interacting with humans are considered limited risk; the main obligation here is that human counterparts must be notified that they are dealing with an AI system.

Credit scoring is one of the main economic use cases singled out as high-risk under the AI Act’s framework.

However, two exceptions are contemplated under which credit scoring would not be considered high-risk. The original Commission proposal and the Council version would exempt SMEs if they use the credit scoring model for their own business. The European Parliament, by contrast, does not want to spare SMEs; it instead intends to exempt systems used for financial fraud detection. Hence, it is crucial to closely monitor the final version of the AI Act to know which exemptions, if any, apply to credit scoring.

When does the AI Act apply to credit scoring?

Importantly, the AI Act only applies if the credit scoring model falls under the AI definition.

The AI Act’s broad definition of AI includes not only advanced machine learning, such as deep learning for generative AI – but also statistical models, as they are typically used in credit scoring. Automated decision systems, composed of external scores, internal statistical models, heuristics, and rules, also likely qualify as AI under the AI Act’s definition.

To pick up from the example in the introduction, imagine again that, to reach a credit decision, a bank uses an external credit score, enriches it with some metrics of its own, and uses some statistical modeling, heuristics, or rules. Does this amount to the use of AI under the AI Act? Most likely, yes.
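To make this example concrete, the following is a minimal sketch of such a composite decision flow. Everything in it is a hypothetical illustration, not any lender's actual policy: the field names, the score cut-off, the debt-to-income heuristic, and the logistic coefficients are all assumptions chosen only to show how an external score, internal metrics, a statistical model, and rules can be combined into one automated decision.

```python
import math
from dataclasses import dataclass


@dataclass
class Applicant:
    external_score: int      # external credit score (assumed 300-850 scale)
    monthly_income: float    # lender's own metric
    monthly_debt: float      # lender's own metric
    months_as_customer: int  # lender's own metric


def default_probability(applicant: Applicant) -> float:
    """Toy logistic model; the coefficients are made up for illustration."""
    dti = applicant.monthly_debt / max(applicant.monthly_income, 1.0)
    z = (4.0
         - 0.01 * applicant.external_score
         + 3.0 * dti
         - 0.02 * applicant.months_as_customer)
    return 1.0 / (1.0 + math.exp(-z))


def decide(applicant: Applicant) -> str:
    # Rule: hard cut-off on the external score (hypothetical threshold).
    if applicant.external_score < 500:
        return "decline"
    # Heuristic: high debt relative to income is routed to a human reviewer.
    if applicant.monthly_debt > 0.5 * applicant.monthly_income:
        return "manual_review"
    # Statistical model: approve only if the predicted default risk is low.
    return "approve" if default_probability(applicant) < 0.10 else "decline"
```

Even though no deep learning is involved, a pipeline of this shape combines statistical inference with rules, which is why it would likely fall under the broad definitions discussed in this section.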

The definition of AI has yet to be finalized, and considerable disagreement exists over its meaning. However, the Commission proposal and the Council version of the act currently include “statistical techniques for learning and inference […] and search and optimization methods”.

Interestingly, the European Parliament wants to cover “knowledge-based approaches, Bayesian estimation or decision-trees” in addition to classical machine learning processes. If this were the case, the AI Act could best be thought of as the “Complex Decision-Making Act”.

It is also important to note that being based outside the EU does not spare companies covered by the AI Act. Like the GDPR, the Act will apply once “AI-based” services are offered or models are used concerning any EU customer. It is even enough for the output of the system to be used in the EU to fall under the AI Act.

How will the AI Act affect credit underwriting in the future?

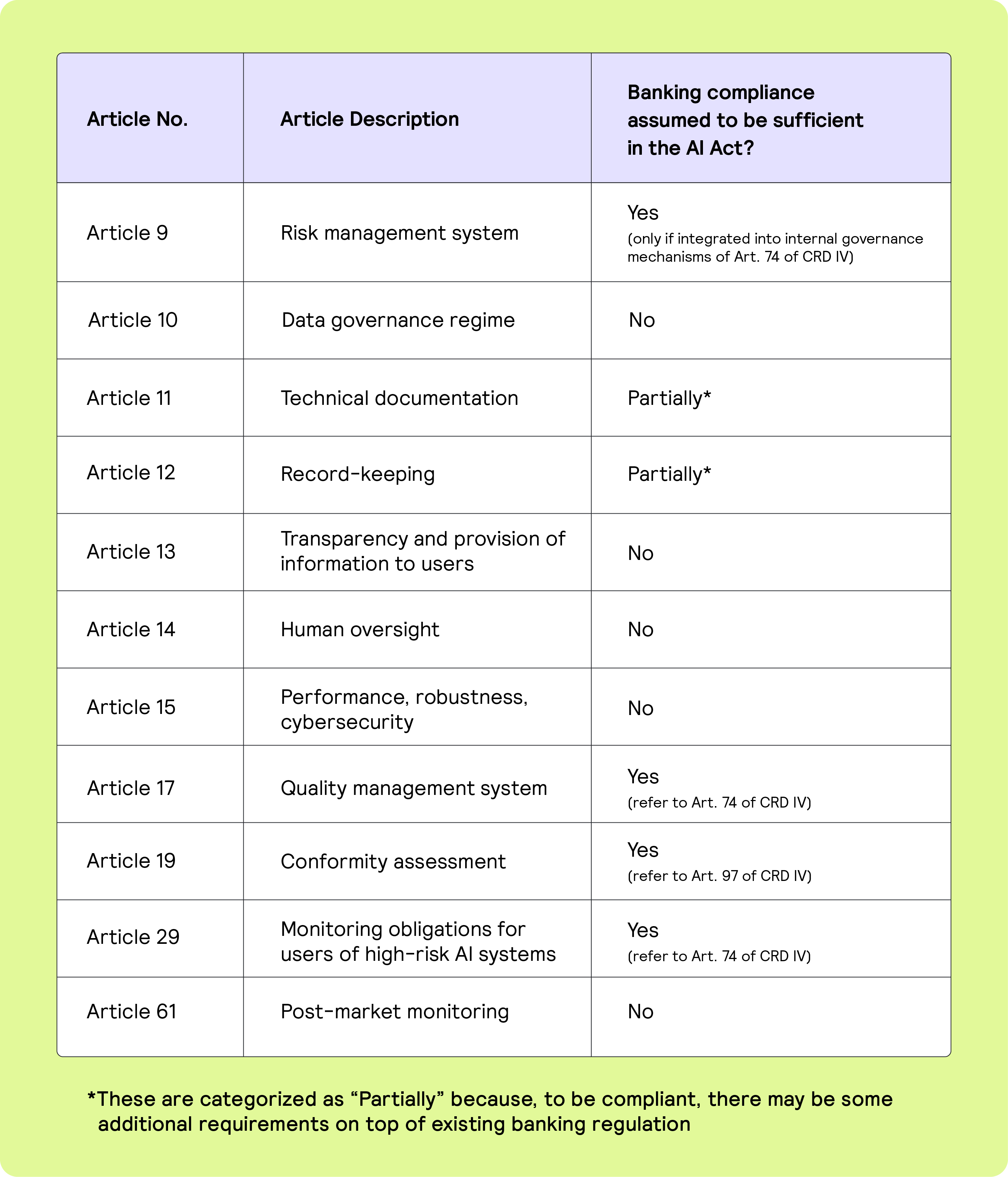

Although the AI Act often refers to existing banking regulations in various places, several additional articles and obligations are proposed.

Below is a brief overview of key provisions from the AI Act that fintech lenders and financial institutions should be aware of.

Key provisions of the AI Act affecting credit underwriting

You can find out more details of these provisions here (see further information).

Data Governance: A critical provision affecting credit underwriting

Providers of AI systems will need to implement a data governance regime ensuring high-quality, non-discriminatory, and representative training data.

For lenders, this means data needs to be cleaned up and representative of customer segments. And they need to be able to certify that their data aligns with these new data standards – all of which come on top of existing banking regulations.
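As a rough illustration of what a representativeness check could look like in practice, the sketch below compares the share of each customer segment in the training data with its share in a reference customer population and flags segments that are underrepresented. The segment labels, the function names, and the 5-percentage-point tolerance are assumptions made for this example; the AI Act itself does not prescribe a specific metric or threshold.

```python
from collections import Counter


def segment_shares(segments):
    """Return the fraction of records that fall into each segment."""
    counts = Counter(segments)
    total = sum(counts.values())
    return {segment: count / total for segment, count in counts.items()}


def underrepresented(training, population, tolerance=0.05):
    """List segments whose training share trails the population share
    by more than `tolerance` (an assumed, illustrative threshold)."""
    train = segment_shares(training)
    pop = segment_shares(population)
    return sorted(
        segment
        for segment, share in pop.items()
        if share - train.get(segment, 0.0) > tolerance
    )


# Hypothetical segment labels for training data and the customer base.
training = ["salaried"] * 80 + ["self_employed"] * 15 + ["student"] * 5
population = ["salaried"] * 60 + ["self_employed"] * 25 + ["student"] * 15
flagged = underrepresented(training, population)
```

In this made-up data set, self-employed applicants and students each make up a smaller share of the training data than of the customer base, so both would be flagged for review.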

In addition, lenders will need to introduce sweeping documentation and record-keeping procedures. Models need to be sufficiently transparent and operated with human oversight. And, importantly, they must comply with demanding performance, robustness, and cybersecurity standards.

These obligations are independent of – and added on top of – existing banking regulation. While the European Parliament did introduce some language into the AI Act seeking to enhance the reliance on existing sectoral regulation – such as banking law – it is unclear to what extent such references will make it into the final version of the AI Act.

Opportunities for lenders under AI regulation

Once the AI Act comes into force, lenders will have some legal certainty around how to operate when using systems that leverage AI.

They will also have the opportunity to build extended levels of trust with their customers.

For regulated credit institutions, a conformity assessment procedure for the AI Act will be integrated into the supervisory review and evaluation procedure provided by banking law. In addition, lenders can self-apply for a certification that confirms to customers they operate with “AI Act compliant” safety and expertise when it comes to storing and using data.

What to expect next

The AI Act will be finalized over the coming months – likely by the end of 2023. One of the crucial points negotiators still have to agree upon is the transition period between the publication of the AI Act in the EU’s Official Journal and its application to industry.

Preparation now will ensure successful compliance in the future

For credit scoring, the AI Act will likely take effect two years after its publication, around mid-2026.

However, modeling, compliance, and management systems need to be updated starting now to ensure the timely implementation of AI Act-compliant functionalities. Irrespective of the final version, having robust and agile infrastructure in place – especially regarding data governance – for making automated credit decisions will be more important than ever.

Being agile will make it easier to adjust to changing regulations

The European Commission will also receive a mandate to update the AI Act regularly and as it sees fit. This will likely apply mainly to the list of high-risk use cases, which may be expanded. Importantly, SMEs (defined as companies with fewer than 250 employees and either a turnover of less than €50 million or a balance sheet total of under €43 million) might be exempted from some high-risk obligations – depending on the outcome of the trilogue negotiations.
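The SME definition given above combines a headcount test with an either/or financial test, which is easy to misread. A minimal sketch of that logic, using the thresholds exactly as stated in this article (the function and parameter names are hypothetical):

```python
def is_sme(employees: int, turnover_eur: float, balance_sheet_eur: float) -> bool:
    """SME test as described in this article: the headcount limit must hold,
    and at least one of the two financial limits must hold as well."""
    meets_headcount = employees < 250
    meets_financials = (turnover_eur < 50_000_000
                        or balance_sheet_eur < 43_000_000)
    return meets_headcount and meets_financials
```

For example, a lender with 120 employees, a turnover of €60 million, and a balance sheet of €40 million would still count as an SME under this reading, because only one of the two financial limits has to be met.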

For the EU, the AI Act will remain a key interest for legislators, and one may expect swift updates if new developments arise on the technological or policy front.

One significant field to watch out for is standardization. Many of the rather vague terms of the AI Act – such as representativeness and freedom from error mentioned under data governance – will be defined more precisely in standards being developed right now by standard-setting organizations.

These standards will hopefully offer a certain safe harbor to companies using AI or making complex decisions, if they can translate them into their models and procedures for credit scoring. Hence, any future-proof credit underwriting process should closely monitor the developments around the EU AI Act and its standardization endeavors.

Disclaimer

The information provided in this article does not, and is not intended to, constitute professional or legal advice; instead, all information, content, and material are for general informational and educational purposes only. Accordingly, before taking any actions based upon such information, we encourage you to consult with the appropriate professionals.

Frequently Asked Questions (FAQs)

Q: What is the EU Artificial Intelligence (AI) Act?

A: The EU Artificial Intelligence Act is the first comprehensive AI regulation, classifying credit underwriting as a high-risk AI use case. It introduces strict requirements for transparency, data governance, and human oversight in automated decision-making.

Q: How will the AI Act impact credit underwriting?

A: Credit underwriting models using credit scores, statistical models, or AI algorithms will face new compliance obligations. Lenders must document decisions, ensure data quality, and maintain explainable and auditable underwriting processes.

Q: Does the AI Act apply outside the EU?

A: Yes. Similar to GDPR, the AI Act applies to any lender offering credit services to EU customers, even if the company is based outside the EU. Global lenders need to prepare now to remain compliant.

Q: What steps should lenders take to prepare for AI regulation?

A: Lenders should start by strengthening data governance, credit decision infrastructure, and model transparency. Using flexible, automated platforms helps ensure readiness for AI Act compliance.

Q: How can Taktile help with AI-compliant credit underwriting?

A: Taktile provides a low-code Decision Platform with built-in audit trails, seamless data integrations, and explainable AI tools. Request a demo to see how Taktile enables lenders to stay agile and compliant under the EU AI Act.